DevOps tools to manage your infrastructure

In this article I explore some DevOps tools to manage your infrastructure and make your life easier through automation.

If you are a System Administrator, I've got some news for you: you are not anymore! Just so you know, your job specification has changed, and you'll be pleased to know that there are tons of tools around which, once you master them, will make your job easier and will effectively change your job title from "System Administrator" to "DevOps Engineer". In this article we'll explore some of the tools that you need to know. The world of IT is always evolving, so your knowledge and skills must be kept up to date too.

In your environment, create a CentOS 7 VM and call it "devops". That is the VM we are going to use to try out some DevOps tools.

To install Ansible in your management Linux CentOS VM, execute the following:

sudo yum install ansible

If your network does not resolve host names for you (no DNS), ensure that every machine you want to manage has an entry in /etc/hosts on the management VM.

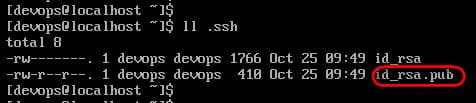
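Before going further, it can help to confirm that every machine you plan to manage really has an entry. The sketch below is one possible way to do that; the `missing_hosts` helper and the IP addresses are made up for illustration, and it runs against a throwaway file rather than the real /etc/hosts.

```shell
# missing_hosts FILE NAME... : print each NAME that has no entry in the hosts-format FILE
# (hypothetical helper, not part of Ansible or any standard tool)
missing_hosts() {
  hosts_file="$1"; shift
  for name in "$@"; do
    # strip comments, then look for the name as a whole field after the IP address
    if ! sed 's/#.*//' "$hosts_file" | awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }'; then
      echo "$name"
    fi
  done
}

# Demo against a scratch file; the addresses below are invented
sample="$(mktemp)"
cat > "$sample" <<'EOF'
127.0.0.1      localhost
192.168.1.21   computer1
192.168.1.22   computer2   # web server
EOF

missing="$(missing_hosts "$sample" computer1 computer2 computer3)"
echo "no entry for: $missing"
```

On a real management VM you would pass /etc/hosts as the first argument instead of the scratch file.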

While logged in as the "devops" user on your Ansible Management VM (the "devops" user needs to be a LOCAL ADMIN; this will be our dedicated Ansible user), issue the command ssh-keygen to generate the SSH keys, accepting the default location for the private and public key files. Do not enter a passphrase at this stage; that is something we will probably do later. The key pair is generated so that we can log on to the clients automatically, which is the whole point of automation.
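If you prefer to script this step instead of answering the prompts, something along these lines should work. It is only a sketch: it writes the key pair to a scratch directory (an assumption made here so that it never overwrites a real key in ~/.ssh), and the 4096-bit RSA choice is mine, not a requirement of Ansible.

```shell
# Scratch directory so the sketch never touches an existing key in ~/.ssh
key_dir="$(mktemp -d)"

if command -v ssh-keygen >/dev/null 2>&1; then
  # -q quiet, -t key type, -b key size in bits, -N "" empty passphrase, -f output file
  ssh-keygen -q -t rsa -b 4096 -N "" -f "$key_dir/id_rsa"
  # Print the fingerprint of the public half, the only file that should leave this machine
  ssh-keygen -l -f "$key_dir/id_rsa.pub"
else
  echo "ssh-keygen not found; install the OpenSSH client first"
fi
```

To generate the real key, point -f at ~/.ssh/id_rsa, which is the default location that the interactive command offers.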

Notice the hidden folder ".ssh", where all the keys are stored. Then ensure that only the public key "id_rsa.pub", and NOT the private "id_rsa" file, is copied to the machines that you want to manage; in the example below it is copied to the computer "computer1".

devops@localhost$ ssh-copy-id -i .ssh/id_rsa.pub computer1 #use -i to specify the identity file; the key is copied to the "devops" account on the target computer

devops@localhost$ ssh-copy-id -i .ssh/id_rsa.pub admin@computer1 #use this form to copy the key to the local "admin" account on the target machine

ssh-copy-id -i .ssh/id_rsa.pub root@client_machine

Remember that the public key is copied to the account you name in the command (or to your current user name if you name none), and that this account needs to be a LOCAL ADMINISTRATOR on the target machine.

- If you get the error "port 22: Connection refused", go ahead and install openssh-server on the target machine

Now ensure that the user can escalate privileges in the target client computer by editing the sudoers file:

sudo visudo #open the /etc/sudoers file and add this line to the bottom of it:

devops ALL=(ALL) NOPASSWD: ALL

#this ensures that the 'devops' user can escalate without being asked for a password

That's it; that is all you need to do to make the client computer Ansible-aware and ready to go.
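A broken sudoers file can lock you out of sudo, so it may be worth checking the rule before it goes live. The sketch below writes the rule to a scratch file and asks visudo to check its syntax. The drop-in path /etc/sudoers.d/devops in the final comment is an assumption; it relies on your distribution including /etc/sudoers.d, which CentOS and Ubuntu normally do.

```shell
rule='devops ALL=(ALL) NOPASSWD: ALL'

# Write the rule to a scratch file first, never straight into /etc
candidate="$(mktemp)"
printf '%s\n' "$rule" > "$candidate"

if command -v visudo >/dev/null 2>&1; then
  # -c only checks the file, -f points visudo at our candidate instead of /etc/sudoers
  visudo -c -f "$candidate" || echo "syntax check failed, do not install this file"
fi

# Only after a clean check would you install it, for example (assumed path):
#   sudo install -m 0440 "$candidate" /etc/sudoers.d/devops
```

Keeping the rule in its own file also makes it easy to remove later without touching the main sudoers file.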

On your Ansible Management Linux VM, edit the file /etc/ansible/hosts and create some groups to manage your target computers; notice that a machine can be in more than one group. If you need to change the SSH port that Ansible uses (22), the target machine name, etc., see the Ansible inventory documentation: https://docs.ansible.com/ansible/latest/user_guide/intro_inventory.html

[Production_Group]

computer1

computer2 ansible_user=admin

computer3

[Lab_Group]

computer[20:30] #includes computer20, computer21, etc till 30

192.168.1.[20:30] #includes every IP address from 192.168.1.20 to 192.168.1.30

After you have done that, run this command and you should receive a successful ping-pong, oh yeah! It works as long as the account you use on your DEVOPS VM also exists on the target machines (in my example I used the account "devops").

ansible -m ping all #check the connection with your target machines
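If you are unsure which machines a range pattern in the inventory covers, remember that both ends of the range are included. The sketch below mimics that expansion in plain shell; the `expand_range` helper is hypothetical and only illustrates the idea, it is not how Ansible implements it.

```shell
# expand_range PREFIX START END : print PREFIX followed by each number from START to END, inclusive
# (hypothetical helper that imitates an inventory pattern such as computer[20:30])
expand_range() {
  i="$2"
  while [ "$i" -le "$3" ]; do
    echo "$1$i"
    i=$((i + 1))
  done
}

lab_hosts="$(expand_range computer 20 30)"
lab_count="$(echo "$lab_hosts" | wc -l | tr -d ' ')"
echo "$lab_hosts"
echo "$lab_count hosts"

# With Ansible installed, the real expansion can be listed without connecting to anything:
#   ansible Lab_Group --list-hosts
```

The range 20 to 30 yields eleven hosts, not ten, which is easy to get wrong when sizing a group.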

Other commands that you can do are:

ansible -m raw -a '/usr/bin/uptime' all #shows you the uptime of your target machines

ansible all -a 'uptime' #same result as above; -a passes the (a)rguments, here to the default command module

ansible -m shell -a 'python -V' all #use the shell (m)odule to query the version of Python that is running

ansible all -b -m service -a 'name=splunk state=started' #ensures that the Splunk service is running

To test the escalation of privileges, issue the commands below, where the -b switch stands for become (so you become root) and -m specifies the module to use for the operation

ansible all -b -a 'whoami'

ansible all -a 'uptime'

ansible client2 -b -a 'shutdown -r'

ansible all -b -m apt -a 'name=apache2 state=present' #ensures all Ubuntu machines have apache2

ansible all -b -m yum -a 'name=httpd state=latest' #ensures all CentOS machines have the latest Apache installed

A bit of theory now. Ansible can run commands at 3 different levels, from the safest (the default), to the less safe, and ending at your own risk; always use the safest (and easiest) level that gets the job done

- Command (default): does not use the shell at all, and therefore cannot use pipes or redirects

- Shell: supports pipes and redirects, but can get tricky because of the user's bash settings, etc.

- Raw: just sends the commands over SSH and does not need Python; use it on routers and other devices where you can't install Python

If you want, you can skip typing "-m command" when issuing commands in Ansible, as the command module is used by default. The command below redirects the output of echo to create a file with the word "hello" in it. This works thanks to "-m shell", as that module supports redirection; "-m raw" does exactly the same as long as the end device supports the redirect.

ansible myGroup -b -m shell -a 'echo "hello" > /root/hello.txt'
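To see why the command module cannot redirect, here is a local analogy that needs no Ansible at all. It is only an illustration of the principle: when a program is started without a shell, ">" is handed over as an ordinary argument; when a shell runs the line, ">" becomes a redirect.

```shell
workdir="$(mktemp -d)"

# Without a shell to interpret it, '>' is just another argument
# (roughly what happens with the command module)
no_shell_output="$(cd "$workdir" && /bin/echo hello '>' hello.txt)"

# With a shell, '>' is interpreted as a redirect (what the shell module gives you)
(cd "$workdir" && sh -c 'echo hello > hello.txt')
shell_file_content="$(cat "$workdir/hello.txt")"

echo "no shell : $no_shell_output"
echo "shell    : file contains '$shell_file_content'"
```

In the first case no file is created and the text "> hello.txt" is simply printed; in the second the file holds the word hello.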

So far we have been using Ansible in ad-hoc mode, which is easy to set up but comes with limitations. With Ansible Playbooks, on the other hand, you can do more complex things, such as using handlers and variables. Playbooks are written in YAML (originally "Yet Another Markup Language", nowadays "YAML Ain't Markup Language"); this language is very sensitive to indentation, which must be done with spaces and never with tabs, so double and triple check your files

ansible-playbook -v test.yaml #executes the playbook test.yaml in verbose mode

We are going to keep using Ansible in ad-hoc mode for a moment; it has some limitations, but it is a great way to get a flavour of Ansible and test the powerful things it can do

The following uses the copy module to (you guessed right!) copy a file to the computers that are part of an Ansible inventory group

ansible myGroup -b -m copy -a 'src=/etc/hosts dest=/etc/hosts'

The following is an example playbook file; note that *.yaml files conventionally start with 3 dashes (---). You can use either "yes" or "true" for the become setting

--- #by convention a playbook starts with 3 dashes; you can end it with 3 dots (...) if you like
- hosts: myGroup #each play starts with a dash -
  become: yes
  tasks:
    - name: do a uname
      shell: uname -a > /home/test.txt
    - name: whoami
      shell: whoami >> /home/test.txt
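Because YAML is so picky about indentation, it is worth checking a playbook before running it for real. The sketch below writes a cut-down copy of the playbook to a scratch file, counts any lines containing a tab, and then, only if Ansible is installed, asks ansible-playbook to parse the file without executing anything. The scratch file and the variable names are assumptions for illustration.

```shell
playbook="$(mktemp)"
cat > "$playbook" <<'EOF'
---
- hosts: myGroup
  become: yes
  tasks:
    - name: do a uname
      shell: uname -a > /home/test.txt
EOF

# YAML does not allow tabs for indentation, so count the lines that contain one
tab_lines="$(grep -c "$(printf '\t')" "$playbook" || true)"
echo "lines containing a tab: $tab_lines"

if command -v ansible-playbook >/dev/null 2>&1; then
  # --syntax-check parses the playbook but does not run any task
  ansible-playbook --syntax-check "$playbook" || echo "syntax check failed"
fi
```

Once the syntax is clean, adding --check to a normal run asks Ansible for a dry run, although tasks that use the shell module are skipped in that mode.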

The following is a playbook that includes a handler (named "start vsftpd"). Remember that a handler runs only when a task that notifies it reports a change, and then only once, at the end of the play. The 3 dashes at the top are recommended in every playbook, but I have omitted them here for the sake of simplicity

- hosts: myGroupLinux1
  become: yes #you need to become root to install packages
  tasks:
    - name: install vsftpd on ubuntu
      apt: name=vsftpd update_cache=yes state=latest #update_cache refreshes the package index first
      ignore_errors: yes #ad-hoc runs carry on after an error, but in a playbook you need this line
      notify: start vsftpd
    - name: install vsftpd on CentOS
      yum: name=vsftpd state=latest
      ignore_errors: yes
      notify: start vsftpd
  handlers:
    - name: start vsftpd #the name must match the "notify" name
      service: name=vsftpd enabled=yes state=started #enables the service on boot and then starts it

The following is a playbook example that shows the use of some variables

- hosts: SomeLinuxGroup
  vars: #"vars" must sit exactly below the "h" of hosts; with one space less or more you get a syntax error
    var1: this is a piece of string
    var2: this is another piece of string
  tasks:
    - name: echo stuff
      shell: echo "{{var1}} is var1, yet var2 is {{var2}}" > /home/admin/Desktop/test.txt

Docker runs containers; Kubernetes is an orchestrator for them

Docker is like virtualisation within virtualisation. In the hypervisor model, you virtualise the hardware with a hypervisor, and then you run a complete Operating System inside each virtual machine. With Docker, on the other hand, you deploy just the virtualised application, with only the absolute minimum it needs to run, in what is called a container. The Docker CLI is the way to administer and manage your containers.

Docker is native to Linux, and to install it on an Ubuntu machine, do as follows:

sudo apt-get update

#install the dependencies below

sudo apt-get install apt-transport-https ca-certificates curl

sudo apt-get install gnupg-agent software-properties-common

#add the Docker repository

curl -fsSL https://download.docker.com/linux/ubuntu/gpg |sudo apt-key add -

sudo add-apt-repository \
"deb [arch=amd64] https://download.docker.com/linux/ubuntu \
$(lsb_release -cs) \
stable"

#finally, install Docker Community Edition (ce)

sudo apt-get install docker-ce docker-ce-cli containerd.io
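After the install it is useful to confirm that both the Docker CLI and the daemon are really there. A minimal sketch follows; the `docker_status` function name and its messages are made up for illustration, and the check assumes the current user is allowed to talk to the daemon (otherwise it reports the daemon as unreachable).

```shell
# docker_status : report whether the docker CLI exists and whether the daemon answers
# (hypothetical helper, written for this article)
docker_status() {
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker CLI not installed"
  elif docker info >/dev/null 2>&1; then
    echo "docker daemon reachable"
  else
    echo "docker CLI installed, daemon not reachable (try sudo, or start the service)"
  fi
}

status="$(docker_status)"
echo "$status"
```

If the daemon is not reachable on a fresh install, running the check with sudo is usually the first thing to try.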

Similar to what GitHub does for code, Docker Hub (https://hub.docker.com/) lets you publish Docker images and make them public. Unless you build your own, you'll get most of your images from Docker Hub

sudo docker run hello-world #downloads the hello-world image from Docker Hub and runs it

sudo docker run -it ubuntu bash #runs a bash shell inside an Ubuntu container

sudo docker images

sudo docker run -it --rm --name nyancat 06kellyjac/nyancat

PowerShell

Do not underestimate the power of PowerShell; this great command line is based on objects rather than on text, as Bash for example is. Here are some handy tips if you are just starting with PS:

Get-Verb

#list the PS approved verbs for cmdlets

Use these 3 core cmdlets to delve into what cmdlets can do for you:

Get-Command

Get-Help

Get-Member

To search among the thousands of available commands in PS, filter your search with the -Verb or the -Noun parameters:

- Get-Command -Verb Get -Noun alias

- Get-Command -Noun file*

"PowerShell isn't something you learn overnight; it's learned command by command. You can speed up your learning by effectively using the core cmdlets."

Meetups in London

- AWS User Group UK

- Ansible London

- DevOps Underground

- London DevOps

- Cloud Native London

- DevOps Playground London

- Linuxing in London

- DevOps Exchange

- London uServices (Microservices) user group

- London Continuous Delivery

- QuruLabs - Somerset House (Linux and Open Source)

- GDG Cloud London

- IBM Cloud - London

- London Puppet User Group

This article is yet to be completed

London, November 2021